Hi,

It has been a couple of weeks since I started using Docker. My number of containers has grown steadily, and now I have to think about the host's memory and CPU utilisation.

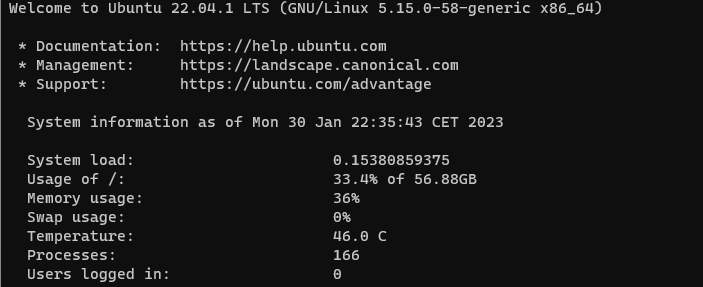

The host is an HP EliteDesk 800 G2 with 8 GB of RAM. To be honest, I am not sure about the CPU. Docker is running on an Ubuntu 22.04.1 LTS server.

Today I tested the UniFi Network Application as a Docker container. It worked perfectly fine, but in Portainer the CPU usage for this container spiked to 250% (?) and then dropped back to a small value. Does this make sense to anyone?

Is there a best practice for estimating the memory and CPU usage of containers and for setting the limits? What should I expect if a container hits its limit? What will Docker do, and how will the container behave?

I started adding something like this to each container, but I am not sure how much memory to allocate to each one:

```yaml
services:
  ...
    deploy:
      resources:
        limits:
          memory: 512mb
  ...
```

Since my host is a multi-core system, would you limit the CPUs as well?
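For the CPU side, I was thinking of something along these lines (untested sketch). The service name `unifi` is hypothetical, and all numbers are placeholders I picked, not measured requirements. As far as I understand, `cpus` caps the container at roughly that many cores' worth of CPU time, while `reservations` only expresses a soft minimum:

```yaml
services:
  unifi:  # hypothetical service name; image, ports, volumes etc. omitted
    deploy:
      resources:
        limits:
          cpus: "2.0"      # placeholder: at most ~2 cores' worth of CPU time
          memory: 1024mb   # placeholder: hard memory cap for the container
        reservations:
          memory: 512mb    # placeholder: soft reservation, not a hard cap
```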

Some screenshots of my current system:

Any recommendation is very much appreciated.

Chris