Summary

Over the past year and a half I’ve been evolving my homelab to expand my knowledge of containerisation and to support my home network.

- Issue type
  - Sanity check - have I configured this correctly?
- OS Version/build
  - Debian 11.5 bullseye (or Raspberry Pi OS equivalent)
- App version
  - Docker version 20.10.22, build 3a2c30b
  - Docker Compose version v2.14.1

Environment

I have four servers, each with a minimal Linux installation plus the Docker Engine and the Compose plugin, installed the approved way.

- Four servers running Debian 11.5 headless
  - 2 x Edge servers: Raspberry Pi 4b, RAM 2GB, SSD 120GB
  - 1 x Services server: Intel NUC7CJYHN, RAM 16GB, SSD 500GB
  - 1 x Security server: Intel NUC8i3BEH, RAM 32GB, SSD 250GB
- Exclusive use of docker-compose.yml files for managing containers
- Configuration-as-code stored in private GitHub repositories
- Server-specific information held in .env.example files; .env files are created before container start

Topology

My two edge servers provide local manual redundancy. All servers support specific functions plus common services.

- Two edge servers running:

- Services server running:

- Security server running:

- Common services running across all four servers:

- Plus other containers, but you get the idea

Evolution

I started with the default network and slowly expanded my knowledge. I then moved to custom bridge networking to have more control over the networking infrastructure. I have recently reconfigured my 4 servers to use an attachable overlay network. I have read articles and searched online to see if I can resolve the problem I am facing, and I feel I have not configured the network correctly so I am reaching out to the community to ask for a sanity check.

Issue

My DNS servers do not resolve my containers correctly.

Overlay Network Creation

The servers are as follows:

| IP Address | Hostname |
|---|---|
| 192.168.1.91 | id-edge1 |
| 192.168.1.92 | id-edge2 |
| 192.168.1.93 | id-security |
| 192.168.1.95 | id-services |

I created a custom overlay network using the following steps:

On Each Server

1. Stop Docker

        sudo systemctl stop docker
        sudo systemctl stop docker.socket

2. Delete interface docker_gwbridge

        sudo ip link set docker_gwbridge down
        sudo ip link del dev docker_gwbridge

3. Start Docker

        sudo systemctl start docker

4. Create docker_gwbridge bridge network

        docker network create --subnet 172.28.0.0/24 --gateway 172.28.0.1 \
          --opt com.docker.network.bridge.name=docker_gwbridge \
          --opt com.docker.network.bridge.enable_ip_masquerade=true \
          --opt com.docker.network.bridge.enable_icc=false \
          docker_gwbridge

On first server

5. Create Swarm

        docker swarm init --advertise-addr 192.168.1.91:2377 --listen-addr 0.0.0.0:2377 --default-addr-pool 172.28.1.0/24 --availability active
        docker info
        docker swarm join-token manager

6. Remove the default ingress overlay network and create id-ingress overlay network

        docker network rm ingress
        docker network create --driver overlay --subnet 172.28.1.0/24 --gateway 172.28.1.1 --ingress id-ingress

7. Create id-overlay overlay network

        docker network create --driver overlay --subnet 172.28.2.0/24 --gateway 172.28.2.1 --attachable id-overlay
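As a quick sanity check on the address plan, the three custom subnets can be tested for overlap before any network is created. This is only a sketch in plain shell arithmetic; the function names (`ip_to_int`, `cidr_first`, `cidr_last`, `cidrs_overlap`, `check_pair`) are made up for illustration and are not part of Docker.

```bash
#!/usr/bin/env bash
# Sketch: check whether IPv4 CIDR blocks overlap (no Docker required).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1   # split the address on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# First address of a CIDR block such as 172.28.1.0/24, as an integer.
cidr_first() {
  local prefix=${1#*/} base mask
  base=$(ip_to_int "${1%/*}")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo $(( base & mask ))
}

# Last address of a CIDR block, as an integer.
cidr_last() {
  local prefix=${1#*/} first
  first=$(cidr_first "$1")
  echo $(( first | (0xFFFFFFFF >> prefix) ))
}

# Succeed if the two blocks share at least one address.
cidrs_overlap() {
  [ "$(cidr_first "$1")" -le "$(cidr_last "$2")" ] &&
    [ "$(cidr_first "$2")" -le "$(cidr_last "$1")" ]
}

# Report one pair of subnets.
check_pair() {
  if cidrs_overlap "$1" "$2"; then
    echo "overlap: $1 $2"
  else
    echo "ok: $1 $2"
  fi
}

# Subnets used for docker_gwbridge, id-ingress and id-overlay above.
check_pair 172.28.0.0/24 172.28.1.0/24
check_pair 172.28.0.0/24 172.28.2.0/24
check_pair 172.28.1.0/24 172.28.2.0/24
```

With the subnets above, each pair is reported as ok. The check does not cover `--default-addr-pool`, which in the swarm init command uses the same 172.28.1.0/24 range as id-ingress.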

On each other server

8. Join Hosts to Swarm

    id-edge2

        docker swarm join --advertise-addr 192.168.1.92:2377 --listen-addr 0.0.0.0:2377 --availability active --token [token from step 5] 192.168.1.91:2377

    id-security

        docker swarm join --advertise-addr 192.168.1.93:2377 --listen-addr 0.0.0.0:2377 --availability active --token [token from step 5] 192.168.1.91:2377

    id-services

        docker swarm join --advertise-addr 192.168.1.95:2377 --listen-addr 0.0.0.0:2377 --availability active --token [token from step 5] 192.168.1.91:2377

    id-edge1

        docker node ls

9. Review network details

At this point I have the following networks on each server:

docker network ls

| NAME | DRIVER | SCOPE |
|---|---|---|
| bridge | bridge | local |
| docker_gwbridge | bridge | local |
| host | host | local |
| id-ingress | overlay | swarm |
| id-overlay | overlay | swarm |
| none | null | local |

Reviewing the three custom networks gives the following details:

networks=("docker_gwbridge" "id-ingress" "id-overlay");for network in ${networks[@]};do docker network inspect $network --format="{{.Name}},{{.Scope}},{{.Driver}},{{range .IPAM.Config}}{{.Subnet}},{{.Gateway}},{{end}}{{.Internal}},{{.Attachable}},{{.Ingress}}";done

| Name | Driver | Scope | Subnet | Gateway | Internal | Attachable | Ingress |
|---|---|---|---|---|---|---|---|
| docker_gwbridge | bridge | local | 172.28.0.0/24 | 172.28.0.1 | false | false | false |
| id-ingress | overlay | swarm | 172.28.1.0/24 | 172.28.1.1 | false | false | true |
| id-overlay | overlay | swarm | 172.28.2.0/24 | 172.28.2.1 | false | true | false |

The bridge is on 172.28.0.0/24, the ingress is on 172.28.1.0/24 and the overlay is on 172.28.2.0/24.

The docker_gwbridge link is present on each server as well:

$ ip link show docker_gwbridge
354: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:92:2e:95:72 brd ff:ff:ff:ff:ff:ff

$ ip address show docker_gwbridge
354: docker_gwbridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:92:2e:95:72 brd ff:ff:ff:ff:ff:ff
    inet 172.28.0.1/24 brd 172.28.0.255 scope global docker_gwbridge
       valid_lft forever preferred_lft forever
    inet6 fe80::42:92ff:fe2e:9572/64 scope link
       valid_lft forever preferred_lft forever
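To repeat this check on all four servers without reading the output by eye, something like the following could be used. It is a sketch that assumes the iproute2 `ip` command and its one-line `-o` output format; `iface_ipv4` and `check_gwbridge` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: confirm docker_gwbridge carries the expected gateway address.

# Print the IPv4 address (CIDR form) assigned to an interface, or nothing.
iface_ipv4() {
  ip -o -4 addr show dev "$1" 2>/dev/null | awk '{ print $4 }'
}

# Compare the address on docker_gwbridge with the expected one
# (defaults to the gateway chosen in step 4).
check_gwbridge() {
  local expected=${1:-172.28.0.1/24} actual
  actual=$(iface_ipv4 docker_gwbridge)
  if [ "$actual" = "$expected" ]; then
    echo "docker_gwbridge ok: $actual"
  else
    echo "docker_gwbridge mismatch: expected $expected, got ${actual:-none}"
    return 1
  fi
}
```

Running `check_gwbridge` on each host should report ok if the bridge was recreated as described.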

Adjust docker compose template

For each docker-compose.yml file that was to use the new id-overlay network, the format was adjusted to reflect this example:

version: '3'

services:
  uptime-kuma:
    image: louislam/uptime-kuma:latest
    container_name: ${CONTAINER_NAME}
    hostname: ${CONTAINER_NAME}.${HOSTNAME}
    dns: ${DNS}
    ports:
      - 3001:3001 # web ui
    volumes:
      - ${APPDATA}:/app/data
    networks:
      id-overlay:
        ipv4_address: ${IPV4_ADDRESS}
    restart: unless-stopped

networks:
  id-overlay:
    name: id-overlay
    external: true

Here is the associated .env.example file:

# Host specifics

CONTAINER_NAME=uptime-kuma

HOSTNAME=[id-edge1 or id-edge2]

DNS=192.168.1.1

IPV4_ADDRESS=[172.28.2.51 or 172.28.2.53]

# Directory locations

APPDATA=/srv/uptime-kuma/data

# Container specifics

#none

Every compose and environment file was updated and each container was recreated.
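Because each .env file is created by hand from .env.example, a small pre-flight check can catch a variable that is missing or still holds a bracketed placeholder. The following is a sketch only; `check_env_file` is a hypothetical helper, and the variable list is taken from the example above.

```bash
#!/usr/bin/env bash
# Sketch: verify that an env file defines every variable the compose file needs.

# Print each required variable that is absent, empty, or still set to a
# "[...]" placeholder copied from .env.example. Returns 1 if any are found.
check_env_file() {
  local file=$1 missing=0 name
  shift
  for name in "$@"; do
    # A usable line looks like NAME=value where value does not start with "[".
    if ! grep -Eq "^${name}=[^[]" "$file"; then
      echo "$name"
      missing=1
    fi
  done
  return "$missing"
}
```

For the uptime-kuma example this could be run as `check_env_file .env CONTAINER_NAME HOSTNAME DNS IPV4_ADDRESS APPDATA` before `docker compose up -d`.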

Inspecting the containers with this command:

docker inspect --format="{{.Name}},{{.HostConfig.NetworkMode}},{{range .NetworkSettings.Networks}}{{.IPAddress}},{{end}}{{range .HostConfig.Dns}}{{.}},{{end}}{{.Config.Hostname}},{{.Config.Domainname}},{{.Config.User}},{{range .NetworkSettings.Networks}}{{.IPAddress}},{{.Gateway}},{{end}},{{range .NetworkSettings.Networks}}{{.Aliases}}{{end}}" --type=container $(docker ps --all --quiet) \
  | sort --numeric-sort --field-separator=. --key=3,3 --key 4,4 | column -t -s ','

Gave the following results:

| Name | Network | IPv4 | DNS | Hostname | Domain | User | IP Addr | Aliases |
|---|---|---|---|---|---|---|---|---|
| /duckdns | id-overlay | 172.28.2.11 | 192.168.1.1 | duckdns.id-edge1 | | | 172.28.2.11 | [duckdns duckdns 346e7144f378 duckdns.id-edge1] |
| /pihole | id-overlay | 172.28.2.21 | 127.0.0.1 | pihole.id-edge1 | | | 172.28.2.21 | [pihole pihole 4d9a0d90e771 pihole.id-edge1] |
| /wireguard | id-overlay | 172.28.2.31 | 192.168.1.1 | wireguard.id-edge1 | | | 172.28.2.31 | [wireguard wireguard 2f8375c803ee wireguard.id-edge1] |
| /npm_app | id-overlay | 172.28.2.41 | 192.168.1.1 | npm_app.id-edge1 | | | 172.28.2.41 | [npm_app app 1cc6fdf20eb5 npm_app.id-edge1] |
| /npm_db | id-overlay | 172.28.2.46 | 192.168.1.1 | npm_db.id-edge1 | | | 172.28.2.46 | [npm_db db 1f0f658752f2 npm_db.id-edge1] |
| /uptime-kuma | id-overlay | 172.28.2.51 | 192.168.1.1 | uptime-kuma.id-edge1 | | | 172.28.2.51 | [uptime-kuma uptime-kuma a2514dc7670e uptime-kuma.id-edge1] |
| /promtail | id-overlay | 172.28.2.221 | 192.168.1.1 | promtail.id-edge1 | | 0:0 | 172.28.2.221 | [promtail promtail 141630167ad5 promtail.id-edge1] |
| /netdata | id-overlay | 172.28.2.231 | 192.168.1.1 | netdata.id-edge1 | | | 172.28.2.231 | [netdata netdata cbbafc1de616 netdata.id-edge1] |
| /diun | id-overlay | 172.28.2.241 | 192.168.1.1 | diun.id-edge1 | | | 172.28.2.241 | [diun diun 7f4cd8a179e7 diun.id-edge1] |
| /duckdns | id-overlay | 172.28.2.13 | 192.168.1.1 | duckdns.id-edge2 | | | 172.28.2.13 | [duckdns duckdns 67969de2dc86 duckdns.id-edge2] |
| /pihole | id-overlay | 172.28.2.23 | 127.0.0.1 | pihole.id-edge2 | | | 172.28.2.23 | [pihole pihole b29676c9da81 pihole.id-edge2] |
| /wireguard | id-overlay | 172.28.2.33 | 192.168.1.1 | wireguard.id-edge2 | | | 172.28.2.33 | [wireguard wireguard 49dec076e51c wireguard.id-edge2] |
| /npm_app | id-overlay | 172.28.2.43 | 192.168.1.1 | npm_app.id-edge2 | | | 172.28.2.43 | [npm_app app 488ee3ab3444 npm_app.id-edge2] |
| /npm_db | id-overlay | 172.28.2.48 | 192.168.1.1 | npm_db.id-edge2 | | | 172.28.2.48 | [npm_db db 7d2ae64fc81c npm_db.id-edge2] |
| /uptime-kuma | id-overlay | 172.28.2.53 | 192.168.1.1 | uptime-kuma.id-edge2 | | | 172.28.2.53 | [uptime-kuma uptime-kuma 0de9f6242047 uptime-kuma.id-edge2] |
| /promtail | id-overlay | 172.28.2.223 | 192.168.1.1 | promtail.id-edge2 | | 0:0 | 172.28.2.223 | [promtail promtail 5ebfb439fdd9 promtail.id-edge2] |
| /netdata | id-overlay | 172.28.2.233 | 192.168.1.1 | netdata.id-edge2 | | | 172.28.2.233 | [netdata netdata f9f7c1f0b255 netdata.id-edge2] |
| /diun | id-overlay | 172.28.2.243 | 192.168.1.1 | diun.id-edge2 | | | 172.28.2.243 | [diun diun 898b634c8a6d diun.id-edge2] |
| /homer | id-overlay | 172.28.2.151 | 192.168.1.1 | homer.id-services | | 1000:1000 | 172.28.2.151 | [homer homer f110a97f1da4 homer.id-services] |
| /static-web-server | id-overlay | 172.28.2.156 | 192.168.1.1 | static-web-server.id-services | | | 172.28.2.156 | [static-web-server static-web-server 408b2baad370 static-web-server.id-services] |
| /homeassistant | id-overlay | 172.28.2.161 | 192.168.1.1 | homeassistant.id-services | | | 172.28.2.161 | [homeassistant homeassistant a598d04f096f homeassistant.id-services] |
| /mqtt | id-overlay | 172.28.2.163 | 192.168.1.1 | mqtt.id-services | | | 172.28.2.163 | [mqtt mosquitto 639f66494909 mqtt.id-services] |
| /node-red | id-overlay | 172.28.2.165 | 192.168.1.1 | node-red.id-services | | node-red | 172.28.2.165 | [node-red node-red 0e16d5b2a8a3 node-red.id-services] |
| /zwave-js-ui | id-overlay | 172.28.2.167 | 192.168.1.1 | zwave-js-ui.id-services | | | 172.28.2.167 | [zwave-js-ui zwave-js-ui 50b1a055e9eb zwave-js-ui.id-services] |
| /prometheus | id-overlay | 172.28.2.171 | 192.168.1.1 | prometheus.id-services | | nobody | 172.28.2.171 | [prometheus prometheus 4265f248d280 prometheus.id-services] |
| /loki | id-overlay | 172.28.2.173 | 192.168.1.1 | loki.id-services | | 1000:1000 | 172.28.2.173 | [loki loki a2ca15abb198 loki.id-services] |
| /grafana | id-overlay | 172.28.2.175 | 192.168.1.1 | grafana.id-services | | 472 | 172.28.2.175 | [grafana grafana 34172224b364 grafana.id-services] |
| /smartthings-metrics | id-overlay | 172.28.2.177 | 192.168.1.1 | smartthings-metrics.id-services | | | 172.28.2.177 | [smartthings-metrics smartthings-metrics b26e84bc5fe7 smartthings-metrics.id-services] |
| /alertmanager | id-overlay | 172.28.2.179 | 192.168.1.1 | alertmanager.id-services | | nobody | 172.28.2.179 | [alertmanager alertmanager 52b3b4b03380 alertmanager.id-services] |
| /teslamate | id-overlay | 172.28.2.181 | 192.168.1.1 | teslamate.id-edge1 | | nonroot:nonroot | 172.28.2.181 | [teslamate teslamate dc01ba5e5b21 teslamate.id-edge1] |
| /teslamate_database | id-overlay | 172.28.2.183 | 192.168.1.1 | teslamate_database.id-edge1 | | | 172.28.2.183 | [teslamate_database database eb7e3f48731f teslamate_database.id-edge1] |
| /teslamate_grafana | id-overlay | 172.28.2.185 | 192.168.1.1 | teslamate_grafana.id-edge1 | | grafana | 172.28.2.185 | [teslamate_grafana grafana 03b730bbff93 teslamate_grafana.id-edge1] |
| /nextcloud | id-overlay | 172.28.2.191 | 192.168.1.1 | nextcloud.id-services | | | 172.28.2.191 | [nextcloud nextcloud e8ac698eaff6 nextcloud.id-services] |
| /nextcloud_mysql | id-overlay | 172.28.2.193 | 192.168.1.1 | nextcloud_mysql.id-services | | | 172.28.2.193 | [nextcloud_mysql mysql 7cefeb355842 nextcloud_mysql.id-services] |
| /grocy | id-overlay | 172.28.2.196 | 192.168.1.1 | grocy.id-services | | | 172.28.2.196 | [grocy grocy 29731909104f grocy.id-services] |
| /promtail | id-overlay | 172.28.2.225 | 192.168.1.1 | promtail.id-services | | 0:0 | 172.28.2.225 | [promtail promtail 9efcfd7c893b promtail.id-services] |
| /netdata | id-overlay | 172.28.2.235 | 192.168.1.1 | netdata.id-services | | | 172.28.2.235 | [netdata netdata ce0d12bd6697 netdata.id-services] |
| /diun | id-overlay | 172.28.2.245 | 192.168.1.1 | diun.id-services | | | 172.28.2.245 | [diun diun 243a785eb467 diun.id-services] |
| /wyze-bridge | id-overlay | 172.28.2.201 | 192.168.1.1 | wyze-bridge.id-security | | | 172.28.2.201 | [wyze-bridge wyze-bridge 406df8e4e703 wyze-bridge.id-security] |
| /frigate | id-overlay | 172.28.2.203 | 192.168.1.1 | frigate.id-security | | | 172.28.2.203 | [frigate frigate 8f6d716dba58 frigate.id-security] |
| /ispyagentdvr | id-overlay | 172.28.2.205 | 192.168.1.1 | ispyagentdvr.id-security | | | 172.28.2.205 | [ispyagentdvr ispyagentdvr 77797cdb530f ispyagentdvr.id-security] |
| /promtail | id-overlay | 172.28.2.227 | 192.168.1.1 | promtail.id-security | | 0:0 | 172.28.2.227 | [promtail promtail ded9ac6f62a0 promtail.id-security] |
| /netdata | id-overlay | 172.28.2.237 | 192.168.1.1 | netdata.id-security | | | 172.28.2.237 | [netdata netdata 92df8b1423a3 netdata.id-security] |
| /diun | id-overlay | 172.28.2.247 | 192.168.1.1 | diun.id-security | | | 172.28.2.247 | [diun diun a924caa8efef diun.id-security] |

Testing Network Connectivity

The next step is to test how the containers see the network and how the network views the containers.

- Router is at 192.168.1.1
- DNS1 is 192.168.1.91 - id-edge1, pi-hole
- DNS2 is 192.168.1.92 - id-edge2, pi-hole
- Container DNS is set in compose files
  - Almost all use 192.168.1.1
  - The two piholes use 127.0.0.1
    - This appears to be best practice according to the pihole forums and dnsmasq.d research
- pi-hole configuration is adjusted
  - FTLCONF_LOCAL_IPV4 is set to either 192.168.1.91 or 192.168.1.92 depending on server
  - DNSMASQ_LISTENING is set to all to allow any interface
  - Volume /etc/pihole/ is mounted locally with a custom.list file that has hosts entries for the entire network
  - Volume /etc/dnsmasq.d/ is mounted locally with a 07-id-domains.conf file that has the following entries:

address=/id-edge1/192.168.1.91

address=/id-edge2/192.168.1.92

address=/id-security/192.168.1.93

address=/id-services/192.168.1.95
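Since these four entries mirror the server table, they could be generated rather than typed by hand. The sketch below does that; `dnsmasq_entries` is a hypothetical helper and the name=address argument format is just a convenience chosen here.

```bash
#!/usr/bin/env bash
# Sketch: generate dnsmasq address= lines from hostname=address pairs.

# Print one "address=/<name>/<ip>" line per argument of the form name=ip.
dnsmasq_entries() {
  local pair
  for pair in "$@"; do
    printf 'address=/%s/%s\n' "${pair%%=*}" "${pair#*=}"
  done
}

# Hostnames and addresses from the server table above.
dnsmasq_entries \
  id-edge1=192.168.1.91 \
  id-edge2=192.168.1.92 \
  id-security=192.168.1.93 \
  id-services=192.168.1.95
```

One point worth keeping in mind when reading the test results: as far as I understand dnsmasq, an `address=/name/ip` entry answers for the name itself and for any name beneath it, so a query such as netdata.id-edge1 sent to pi-hole would be expected to return 192.168.1.91.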

resolv.conf

The command:

echo "Host";cat /etc/resolv.conf;containers=$(docker ps --all --quiet);for container in $containers;do containerdisplay=$(docker inspect --type=container --format="{{.Name}}" $container);echo -e "\nContainer $containerdisplay";docker exec $container cat /etc/resolv.conf;done

The output:

Output: resolv.conf

Host

# Generated by resolvconf

nameserver 192.168.1.1

Container /pihole

nameserver 127.0.0.11

options ndots:0

Container /promtail

nameserver 127.0.0.11

options ndots:0

Container /wireguard

nameserver 127.0.0.11

options ndots:0

Container /uptime-kuma

nameserver 127.0.0.11

options ndots:0

Container /npm_app

nameserver 127.0.0.11

options ndots:0

Container /npm_db

nameserver 127.0.0.11

options ndots:0

Container /netdata

nameserver 127.0.0.11

options ndots:0

Container /duckdns

nameserver 127.0.0.11

options ndots:0

Container /diun

nameserver 127.0.0.11

options ndots:0

The analysis:

- Each container uses Docker's embedded DNS server (127.0.0.11) for name resolution, while the host uses the router (192.168.1.1)
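The same conclusion can be reached mechanically by extracting the nameserver lines from each resolv.conf. This is a sketch; `list_nameservers` and `uses_embedded_dns` are hypothetical helper names, and both read resolv.conf-style text from standard input.

```bash
#!/usr/bin/env bash
# Sketch: detect whether a resolv.conf points only at Docker's embedded DNS.

# Print the nameserver addresses found in resolv.conf-style text on stdin.
list_nameservers() {
  awk '$1 == "nameserver" { print $2 }'
}

# Succeed if the only nameserver listed is 127.0.0.11.
uses_embedded_dns() {
  [ "$(list_nameservers)" = "127.0.0.11" ]
}
```

For example, `docker exec netdata cat /etc/resolv.conf | uses_embedded_dns && echo embedded` should print embedded for every container listed above. The address given in the compose `dns:` setting does not appear in the container's resolv.conf on a user-defined network; the embedded server forwards to it for names it cannot answer itself.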

host ping tests

The command:

ping -c 1 id-edge1;ping_targets=("id-edge1" "netdata.id-edge1" "netdata.id-edge2" "netdata.id-services" "netdata.id-security" "nordlynx.id-services");for ping_target in ${ping_targets[@]};do ping -c 1 $ping_target;done

The output:

Output: host ping

PING id-edge1 (127.0.1.1) 56(84) bytes of data.

64 bytes from id-edge1 (127.0.1.1): icmp_seq=1 ttl=64 time=0.125 ms

--- id-edge1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.125/0.125/0.125/0.000 ms

PING id-edge1 (127.0.1.1) 56(84) bytes of data.

64 bytes from id-edge1 (127.0.1.1): icmp_seq=1 ttl=64 time=0.125 ms

--- id-edge1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.125/0.125/0.125/0.000 ms

PING netdata.id-edge1 (192.168.1.91) 56(84) bytes of data.

64 bytes from id-edge1 (192.168.1.91): icmp_seq=1 ttl=64 time=0.122 ms

--- netdata.id-edge1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.122/0.122/0.122/0.000 ms

PING netdata.id-edge2 (192.168.1.92) 56(84) bytes of data.

64 bytes from id-edge2 (192.168.1.92): icmp_seq=1 ttl=64 time=0.231 ms

--- netdata.id-edge2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.231/0.231/0.231/0.000 ms

PING netdata.id-services (192.168.1.95) 56(84) bytes of data.

64 bytes from id-services (192.168.1.95): icmp_seq=1 ttl=64 time=0.258 ms

--- netdata.id-services ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.258/0.258/0.258/0.000 ms

PING netdata.id-security (192.168.1.93) 56(84) bytes of data.

64 bytes from id-security (192.168.1.93): icmp_seq=1 ttl=64 time=0.234 ms

--- netdata.id-security ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.234/0.234/0.234/0.000 ms

PING nordlynx.id-services (192.168.1.95) 56(84) bytes of data.

64 bytes from id-services (192.168.1.95): icmp_seq=1 ttl=64 time=0.244 ms

--- nordlynx.id-services ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.244/0.244/0.244/0.000 ms

The analysis:

- Pinging the host or the containers from any of the servers resolves to the bare metal interface address

container ping tests

The command:

ping_targets=("netdata.id-edge1" "netdata.id-edge2" "netdata.id-services" "netdata.id-security");for ping_target in ${ping_targets[@]};do docker exec netdata ping -c 1 $ping_target;done

The output:

Output: container ping

PING netdata.id-edge1 (172.28.2.231): 56 data bytes

64 bytes from 172.28.2.231: seq=0 ttl=64 time=0.229 ms

--- netdata.id-edge1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.229/0.229/0.229 ms

PING netdata.id-edge2 (172.28.2.233): 56 data bytes

64 bytes from 172.28.2.233: seq=0 ttl=64 time=0.492 ms

--- netdata.id-edge2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.492/0.492/0.492 ms

PING netdata.id-services (172.28.2.235): 56 data bytes

64 bytes from 172.28.2.235: seq=0 ttl=64 time=0.619 ms

--- netdata.id-services ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.619/0.619/0.619 ms

PING netdata.id-security (172.28.2.237): 56 data bytes

64 bytes from 172.28.2.237: seq=0 ttl=64 time=0.632 ms

--- netdata.id-security ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.632/0.632/0.632 ms

The analysis:

- Pinging the containers from within the network predictably returns each container's assigned IP address

container dig tests

The command:

dig_targets=("netdata.id-edge1" "netdata.id-edge2" "netdata.id-services" "netdata.id-security" "nordlynx.id-services");for dig_target in ${dig_targets[@]};do docker exec pihole dig $dig_target a;done

The output:

Output: container dig

; <<>> DiG 9.16.33-Debian <<>> netdata.id-edge1 a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 30577

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;netdata.id-edge1. IN A

;; ANSWER SECTION:

netdata.id-edge1. 600 IN A 172.28.2.231

;; Query time: 3 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Tue Jan 17 16:59:34 MST 2023

;; MSG SIZE rcvd: 66

; <<>> DiG 9.16.33-Debian <<>> netdata.id-edge2 a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 64329

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;netdata.id-edge2. IN A

;; ANSWER SECTION:

netdata.id-edge2. 600 IN A 172.28.2.233

;; Query time: 0 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Tue Jan 17 16:59:34 MST 2023

;; MSG SIZE rcvd: 66

; <<>> DiG 9.16.33-Debian <<>> netdata.id-services a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 59183

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;netdata.id-services. IN A

;; ANSWER SECTION:

netdata.id-services. 600 IN A 172.28.2.235

;; Query time: 3 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Tue Jan 17 16:59:34 MST 2023

;; MSG SIZE rcvd: 72

; <<>> DiG 9.16.33-Debian <<>> netdata.id-security a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 47200

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;netdata.id-security. IN A

;; ANSWER SECTION:

netdata.id-security. 600 IN A 172.28.2.237

;; Query time: 0 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Tue Jan 17 16:59:35 MST 2023

;; MSG SIZE rcvd: 72

; <<>> DiG 9.16.33-Debian <<>> nordlynx.id-services a

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 59261

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;nordlynx.id-services. IN A

;; ANSWER SECTION:

nordlynx.id-services. 0 IN A 192.168.1.95

;; Query time: 3 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Tue Jan 17 16:59:35 MST 2023

;; MSG SIZE rcvd: 65

The analysis:

- Using a pihole container with no nameserver specified returns the container IP addresses, except for nordlynx.id-services, which returns the host address 192.168.1.95

The command:

dig_targets=("netdata.id-edge1" "netdata.id-edge2" "netdata.id-services" "netdata.id-security" "nordlynx.id-services");for dig_target in ${dig_targets[@]};do docker exec pihole dig @192.168.1.1 $dig_target a;done

The output:

Output: container dig

; <<>> DiG 9.16.33-Debian <<>> @192.168.1.1 netdata.id-edge1 a

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 8053

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;netdata.id-edge1. IN A

;; ANSWER SECTION:

netdata.id-edge1. 0 IN A 192.168.1.91

;; Query time: 0 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Tue Jan 17 16:59:42 MST 2023

;; MSG SIZE rcvd: 61

; <<>> DiG 9.16.33-Debian <<>> @192.168.1.1 netdata.id-edge2 a

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 3328

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;netdata.id-edge2. IN A

;; ANSWER SECTION:

netdata.id-edge2. 0 IN A 192.168.1.92

;; Query time: 3 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Tue Jan 17 16:59:42 MST 2023

;; MSG SIZE rcvd: 61

; <<>> DiG 9.16.33-Debian <<>> @192.168.1.1 netdata.id-services a

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 36468

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;netdata.id-services. IN A

;; ANSWER SECTION:

netdata.id-services. 0 IN A 192.168.1.95

;; Query time: 0 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Tue Jan 17 16:59:43 MST 2023

;; MSG SIZE rcvd: 64

; <<>> DiG 9.16.33-Debian <<>> @192.168.1.1 netdata.id-security a

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 58716

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;netdata.id-security. IN A

;; ANSWER SECTION:

netdata.id-security. 0 IN A 192.168.1.93

;; Query time: 3 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Tue Jan 17 16:59:43 MST 2023

;; MSG SIZE rcvd: 64

; <<>> DiG 9.16.33-Debian <<>> @192.168.1.1 nordlynx.id-services a

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 8279

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;nordlynx.id-services. IN A

;; ANSWER SECTION:

nordlynx.id-services. 0 IN A 192.168.1.95

;; Query time: 0 msec

;; SERVER: 192.168.1.1#53(192.168.1.1)

;; WHEN: Tue Jan 17 16:59:43 MST 2023

;; MSG SIZE rcvd: 65

The analysis:

- Using a pihole container with the router (192.168.1.1) specified as the nameserver returns the host IP addresses
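The two dig runs can be condensed into a side-by-side comparison per name. The following is a sketch that assumes `dig` is available inside the container (as it is in the pihole container used above); `compare_answers` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: compare the answer from a container's default resolver with the
# answer from an explicitly named DNS server.

# Usage: compare_answers <container> <dns-server> <name>
compare_answers() {
  local container=$1 server=$2 name=$3 default_answer explicit_answer
  # No @server: the query goes to whatever the container's resolv.conf lists.
  default_answer=$(docker exec "$container" dig +short "$name" a)
  # With @server: the query bypasses the container's default resolver.
  explicit_answer=$(docker exec "$container" dig +short "@$server" "$name" a)
  printf '%s default=%s explicit=%s\n' "$name" "$default_answer" "$explicit_answer"
}
```

Calling `compare_answers pihole 192.168.1.1 netdata.id-edge1` would then show both the overlay address and the host address on one line, which makes the split between the two views of the network easier to see.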

The Big Issue

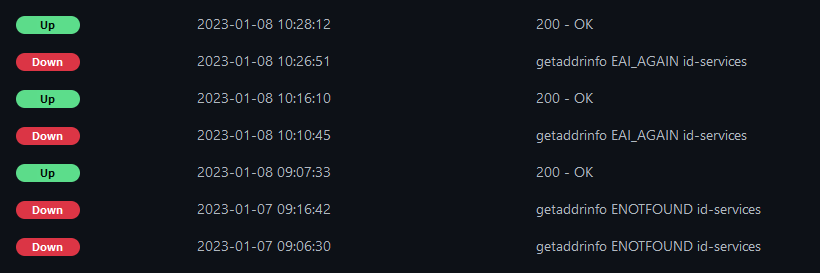

When using uptime-kuma on a target with a hostname, after a while the getaddrinfo EAI_AGAIN error message will appear:

This, along with the ECONNREFUSED message hints that my network configuration may not be correct.

There have been a few issues raised on the uptime-kuma repo, but I agree that this isn’t an issue with how uptime-kuma does business.
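EAI_AGAIN is getaddrinfo's code for a temporary name resolution failure, so one way to narrow this down outside uptime-kuma is to repeat a lookup at intervals and count the failures. The following is a sketch; `probe_lookups` is a hypothetical helper, and the usage example assumes `getent` exists in the container image.

```bash
#!/usr/bin/env bash
# Sketch: run a lookup command repeatedly and count how many attempts fail.

# Usage: probe_lookups <attempts> <delay-seconds> <command> [args...]
probe_lookups() {
  local attempts=$1 delay=$2 failures=0 i=0
  shift 2
  while [ "$i" -lt "$attempts" ]; do
    # Any non-zero exit status from the lookup command counts as a failure.
    if ! "$@" > /dev/null 2>&1; then
      failures=$((failures + 1))
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "$failures/$attempts lookups failed"
}
```

For instance, `probe_lookups 60 5 docker exec uptime-kuma getent hosts netdata.id-edge2` would try one lookup every five seconds for five minutes. A non-zero failure count would suggest that resolution itself is intermittent rather than anything specific to uptime-kuma.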

Questions

1. Are my three custom networks defined correctly?

2. Should I be overriding the resolv.conf settings in the Docker daemon?

3. Is there a fundamental issue with Docker networking that I am missing?

4. Is there a best practice for DNS settings in Docker when using a containerised DNS service (like pihole)?

5. Is there a best practice for DNS settings in Docker when using a containerised VPN service (like WireGuard)?

6. Should I set:

6.1. container_name to [container_name] and hostname to [container_name].[hostname] as I have now or

6.2. container_name to [container_name].[hostname] and hostname to [hostname]?

If you’ve read this far, thank you for your patience, and I hope you can help!