So, really, unless you’re trying to run Docker-in-Docker (it works, but it’s probably not the recommended approach if all you need is the docker client), your best bet is to install just the docker CLIENT binary into your container instead of the entire docker server stack.

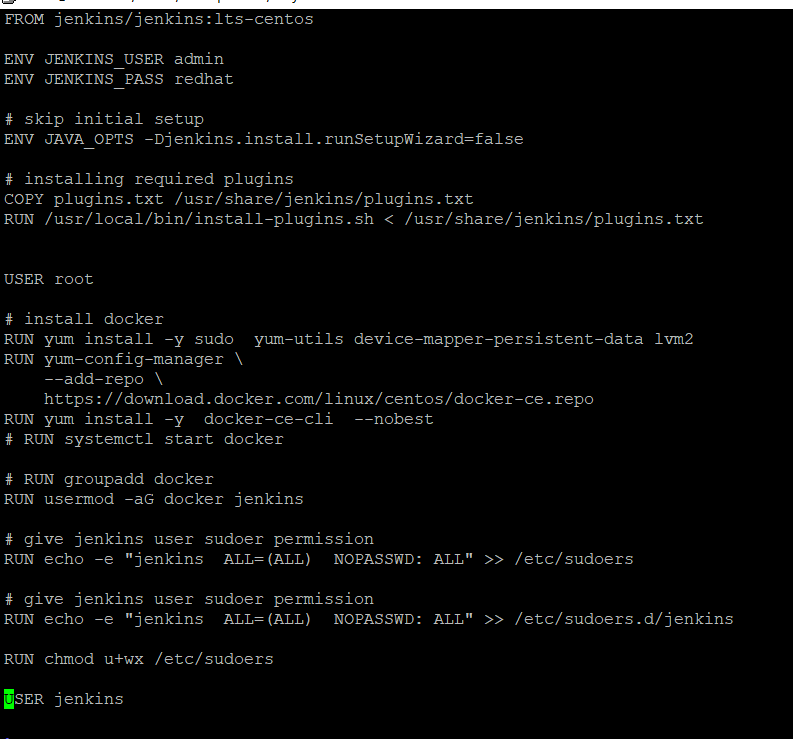

The RUN stanza for the Dockerfile:

ARG DOCKER_CLIENT="docker-17.06.2-ce.tgz"

# Download the static build, keep only the client binary, then clean up
RUN cd /tmp/ \
    && curl -sSL -O https://download.docker.com/linux/static/stable/x86_64/${DOCKER_CLIENT} \
    && tar zxf ${DOCKER_CLIENT} \
    && mkdir -p /usr/local/bin \
    && mv ./docker/docker /usr/local/bin \
    && chmod +x /usr/local/bin/docker \
    && rm -rf /tmp/*

All in one RUN step, so you don’t bloat your image with extra layers. This keeps only the docker client binary and drops the rest of the cruft in the package. Because it comes from the static tarball, the binary is statically linked, so you’re not going to get any missing library issues. A further benefit: this works on any x86_64 Linux base image that has curl and tar available, not just Ubuntu… meaning if you ever change the base OS your Jenkins image is built on (or have a corporate standard and roll your own, or use the alpine version instead of the ubuntu version, or…) you don’t have to change the way you run Jenkins, Docker or the client utilities.

As a second recommendation, if security is a thing for you, I REALLY recommend using the TCP network API, secured through TLS, and just baking the certificates into your Jenkins installation. You can then set "ENV DOCKER_HOST=tcp://docker_host:2376" in your Dockerfile. Why? The /var/run/docker.sock socket is pretty much root access to your entire docker cluster… not just the server that socket file lives on; if you’re running swarm, it gives you access to ALL systems on the ENTIRE swarm. If any server is broken into, or a container with the socket available is compromised, the attacker has access to your entire swarm, provided they’re smart enough to work around docker’s rather basic scheduler.
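As a rough sketch of what baking in the certificates and setting DOCKER_HOST could look like in the Dockerfile: DOCKER_TLS_VERIFY and DOCKER_CERT_PATH are standard docker client environment variables, and the client looks for ca.pem, cert.pem and key.pem in the cert path. The certs/ source directory and the /etc/docker-certs destination are hypothetical names chosen for illustration, and docker_host is the same placeholder host name used above.

```dockerfile
# Hypothetical paths: certs/ in the build context, /etc/docker-certs in the image.
# The docker client expects these three file names inside DOCKER_CERT_PATH.
COPY certs/ca.pem certs/cert.pem certs/key.pem /etc/docker-certs/

# Point the docker client at the TLS-secured TCP endpoint instead of the socket.
ENV DOCKER_HOST=tcp://docker_host:2376 \
    DOCKER_TLS_VERIFY=1 \
    DOCKER_CERT_PATH=/etc/docker-certs
```

With these set, a plain "docker version" run inside the container should talk to the remote daemon over TLS. Keep in mind that key.pem has to be readable by whichever user Jenkins runs as, so the file ownership or permissions may need adjusting for your image.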

With the network API enabled and secured with TLS, the ONLY attack vector for your swarm becomes the Jenkins container itself, because it’s the only thing holding the TLS certificates needed to access the network API… and users can’t (easily, at least) start another container with that same access, as they can’t start a new container through the host with the certs in it.

In MOST installations, admins keep the socket available and turn on the network API as a second access point. In a REALLY secure installation, however, you can do the reverse: turn off the socket, leave only the network API, and so keep even the root account on the command line from accessing the swarm itself (without reconfiguring and restarting Docker). While we don’t do this often, I have found this to be an excellent way to keep a development team that has login access (for other reasons) from being able to start/stop containers other than through an approved UI such as Jenkins jobs. YMMV.

and you can script from there.
